BronyTales Under Fire - Chapter 1 - Trouble

Four days before the one-year anniversary of the first public opening of BronyTales, we experienced our first DDoS attack. Not just any random attack, though: this was someone with experience bypassing the specific DDoS protection system I had purchased. A mysterious nemesis, no motives or suspects, a server on fire, untraceable weapons, and one question through it all: when will the server be fixed?

A story about server administration, community management, computer forensics, and a touch of noir. These are the tales of Data, the sysadmin P.I.

Chapter 1: Trouble

The day is April 10th, 2020. Last week’s April Fools event was great fun, everything retro and extra Minty. PonyFest is winding up to have a second event. We first opened to the public on 4-15-2019, and the current thread going around is what we should do for the 1-year anniversary later that week. Cake? Too cliché. Party? We’re doing that for PonyFest. Someone else had other plans, though. Largely unnoticed, at precisely 3:07 AM, the first attack hits. It’s short-lived and mostly written off as a server hiccup. Thanks to an overzealous spam filter, I don’t get any notification that there was an attack.

5:11 PM. It’s Friday, and I’m freeeeee! Now I can stop doing server stuff for other people and do server stuff for other people! Wait.

5:15 PM. The second attack hits. Again, I don’t see a notification for it because of the spam filter, and I just assume the network issues people are complaining about come down to player-side ISP problems.

5:24 PM. I’m now in my home office with a freshly heated plate of frozen corn dogs, ready to finish this server setup! As part of a planned server update, some additional network configuration needs to be done. I’m finding some odd differences between the provided Debian distribution and the stock Debian it’s supposed to be, so I’m working out a hack to get my network settings to apply the way they should.

5:51 PM. About this time I notice something a little odd about the server activity. Mind you, I’m still trying to work out how to trick the system into doing what I want with network routing, since the “official” method doesn’t work on this system. I have a screen in my office dedicated to showing the server console, and right about now it starts spamming a new error: the bot got knocked offline. This is especially strange, since the bot is hosted on the same server as the Minecraft server, and that server has a gigabit connection. I assume it’s a Discord service error and continue with my current task. I’ll look into it if it’s still broken when I’m done.

6:22 PM. I finally figure out a way to get my routing settings to apply to the server. It’s definitely a bit of a hack, but it works! Everything I throw at it holds with no issues. Wait. Some issues? The Discord chat is filling up with people asking about the server... it seems like no one can join the Minecraft server now? I try for myself, just to be sure, and I get the same error. Oh no! What did I DO!?

6:39 PM. The issue is intermittent, and I can’t find any problems on the server. I can’t find any issues in the timings reports either, but not because there aren’t any; I just can’t get the timings reports at all. The server fails to connect to the reporting server. Something is definitely wrong. Hoping to clear whatever mystery bug is causing this, I restart the server.

6:52 PM. The issue returns. Around this point I also decide to jump into the VC with everyone else, partly to talk through the issues and see if there’s some detail I missed, and partly just to hang out while I troubleshoot.

7:43 PM. By now I’m beginning to wonder if it’s a DNS issue related to round-robin. I recently had a similar incident in another server environment, and it would certainly explain why the Minecraft server sometimes couldn’t connect if there was a network issue with the datacenter’s DNS. I open up resolv.conf to update the nameservers, but I’m already using a public DNS resolver. Huh. Maybe the DNS record updated and the server is using a cached value?
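
On Debian, checking which resolver a box uses comes down to the `nameserver` lines in `/etc/resolv.conf`. As a rough illustration, here is a Python sketch. It is not the actual tooling used on BronyTales, and the function names are hypothetical. It parses that format and keeps only globally routable addresses, using the standard `ipaddress` module's `is_global` property to tell a public resolver apart from a local or LAN one.

```python
import ipaddress
from typing import List


def nameservers(resolv_conf: str) -> List[str]:
    """Return the addresses on `nameserver` lines of resolv.conf-style text."""
    servers = []
    for raw in resolv_conf.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", ";")):
            continue  # blank line or comment line
        fields = line.split()
        if fields[0] == "nameserver" and len(fields) >= 2:
            servers.append(fields[1])
    return servers


def public_nameservers(resolv_conf: str) -> List[str]:
    """Keep only nameservers with globally routable (not private/loopback) addresses."""
    return [a for a in nameservers(resolv_conf) if ipaddress.ip_address(a).is_global]


if __name__ == "__main__":
    # Hypothetical file contents, for illustration only.
    sample = """\
# hypothetical /etc/resolv.conf
nameserver 127.0.0.53
nameserver 192.168.1.1
nameserver 1.1.1.1
options timeout:2
"""
    print(nameservers(sample))         # ['127.0.0.53', '192.168.1.1', '1.1.1.1']
    print(public_nameservers(sample))  # ['1.1.1.1']
```

If the only nameservers listed are already public, the resolver configuration itself is unlikely to be the problem. That is the conclusion reached above, and it leaves the cached-value theory as the next suspect.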

8:03 PM. I make changes on the server and the proxy host to clear the DNS cache, then reboot the server again. Soon after this reboot the connections stabilize for a while, but I’m still seeing odd glitches, with the occasional socket timing out when it shouldn’t be.
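
The stale-cache theory is easy to model. The Python sketch below is a toy TTL cache. It is not how the JVM, the proxy, or any particular resolver actually implements DNS caching. A cached answer keeps being returned until its TTL expires or the cache is flushed, even after the upstream record has changed. The hostname and addresses are hypothetical documentation examples.

```python
import time
from typing import Callable, Dict, Tuple

# A resolver function returns (address, ttl_seconds) for a hostname.
Resolver = Callable[[str], Tuple[str, float]]


class TTLCache:
    """Toy DNS-style cache: an answer is reused until its TTL runs out
    or the cache is flushed, even if the upstream record has changed."""

    def __init__(self, resolve: Resolver,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._resolve = resolve
        self._clock = clock
        # hostname -> (cached address, absolute expiry time)
        self._entries: Dict[str, Tuple[str, float]] = {}

    def lookup(self, name: str) -> str:
        now = self._clock()
        cached = self._entries.get(name)
        if cached is not None and now < cached[1]:
            return cached[0]  # fresh entry: upstream is not consulted at all
        address, ttl = self._resolve(name)
        self._entries[name] = (address, now + ttl)
        return address

    def flush(self) -> None:
        """Drop every cached answer, as clearing a resolver cache would."""
        self._entries.clear()


if __name__ == "__main__":
    # Hypothetical upstream zone; names and addresses are documentation examples.
    zone = {"mc.example.com": ("203.0.113.10", 300.0)}
    cache = TTLCache(lambda name: zone[name])
    print(cache.lookup("mc.example.com"))  # 203.0.113.10
    zone["mc.example.com"] = ("203.0.113.20", 300.0)  # record changes upstream
    print(cache.lookup("mc.example.com"))  # still 203.0.113.10 (stale)
    cache.flush()
    print(cache.lookup("mc.example.com"))  # 203.0.113.20
```

Flushing forces a fresh lookup. Clearing the DNS cache on the server and the proxy host is meant to achieve exactly that.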

11:08 PM. Connections get really bad again. This is where time breaks down for me, as I’m now too tired to keep track of what I’m doing. Trying to fix a problem I thought I caused, and in fact had nothing to do with, while also being well past my regular bedtime is a bit stressful. I don’t remember everyone who was in the voice chat, partly because my focus was elsewhere and partly from lack of sleep, but I do remember two individuals: Tantabus, because he tends to stay up late anyway, and someone I had never talked to before. This person had lots of questions, covering where in the world the server was hosted, the datacenter, programming APIs, network infrastructure, support tickets, swatting attacks, DDoS attacks, Xbox hacking, and VPNs, to name a few. I was personally very concerned when they mentioned that they had once rented a server and launched a DDoS against their school, and I gave them a stern warning about that activity. Attacking computer infrastructure that you do not own or have authorization to test is a federal criminal offense in the US. Don’t do it.

8:16 AM. I have been up for 27 consecutive hours and have been working on this issue for 14 hours straight. My normal Friday bedtime was over ten hours ago. I am spent. Still thinking it’s a datacenter issue, I update the DNS for mc.bronytales.com to point to my home, where I set up a simple temporary creative server that people can join and mess around on while I get some sleep. I exit the voice chat, go to my bedroom, put up my blackout curtains, put my phones on manual silent, and sleep soundly until 4 PM. Coincidentally, the DDoS attack stops right around 8:35 AM.

Meanwhile, the temp server is a weird mix of anarchy and order. Some people seem to think that a temporary server is a reason to completely disregard the server rules on lag machines and griefing. I spend the first hour or two after waking up going through the discussions and issues I’ve missed, and jumping on to see what everyone built. I fell in love with the Rainbow Dash model that someone made, and greatly enjoyed exploring the wacky builds people filled the spawn area with. Seriously, you all are awesome - staff for helping keep order, and players for the awesome builds I got to wake up to. You are the reason I work to keep this server going.

My sleep cycle now in tatters and the main server currently offline, I figure I might as well use this downtime to finish the 1.15.1 -> 1.15.2 upgrade I had been meaning to do. When you only have a few plugins to test, an upgrade is a minor nuisance you might not normally worry about, particularly for a minor update like this one. When you have over 100 plugins, though, the task becomes much more daunting, especially when nearly half of them are partially or fully custom-made. Lots of stuff tends to break over really small changes.

I work on getting the server updated for about ten hours. A minor blip happens at 2:43 AM, when a DDoS hits the server for about 10 minutes, but no one is on the server; in fact, there isn’t even a server running to connect to. That doesn’t stop a particular someone from trying to ping it anyway. More on that later. Spoilers…

I finish the upgrades and switch the server back on at 4:18 AM. I stick around for a while to make sure there aren’t any issues with the plugin upgrades that I missed. I do end up finding one plugin that slipped through and get it fixed, and with no other issues turning up over the next ten minutes, I log off and stumble off to bed at about 5 AM.

April 12th. Well, the daylight side of it, anyway. 7 AM. I am up much too early. I can destroy my sleep schedule and sleep when I can if I need, but Dog knows the time, and the time is time to go out. I let Dog out and the sun burns. I am a vampire now. I urge Dog to hurry up, eyes screwed shut and nursing my poor aching head. Outside done, I hastily return to my hidey cave to wait out the day.

1 PM-ish. Awake! Mostly. I take down the blackout curtains and dread the coming Monday. I am going to be sleepwalking at work at this rate, which is very much not a good thing when you have programming deadlines.

4:52 PM. Another DDoS attack. The bot goes offline again. I dive back into the server to try to figure out what the Minecraft server is having trouble with.

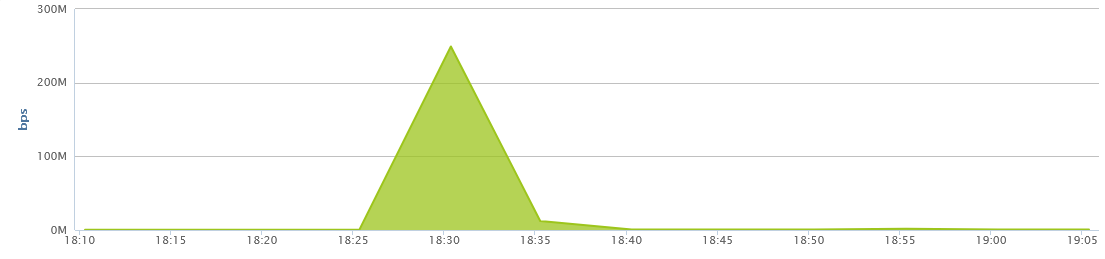

6:50 PM. People are still having problems logging in, and at this point I’m suspecting datacenter network issues. I jump on the server, download and install tshark (Wireshark for the terminal), and grab a dump of the network traffic. Nothing too exciting so far, except that a number of ICMP packets seem to be exceeding their TTL. From... servers that I’m not pinging? That’s odd. I pull up the network monitoring to see if there’s anything unusual there. I find something very interesting. A high spike in traffic.
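
For anyone curious what those odd ICMP packets contain: an ICMP Time Exceeded message is type 11, and code 0 means "TTL exceeded in transit". A router sends it back to a packet's source address when that packet's TTL runs out in transit. Per RFC 792, the message quotes the original packet's IP header plus the first 64 bits of its data. In tshark, a display filter such as `icmp.type == 11` (passed with `-Y`) narrows a capture down to these packets.

The Python sketch below assumes you have raw IPv4 packets as bytes, for example exported from a capture. It pulls out who sent the error and where the original packet was headed, so you can check whether it was traffic you actually sent. The function names are hypothetical, and this is an illustration rather than the tooling used here.

```python
import ipaddress
from collections import Counter
from typing import Iterable, Optional, Tuple

ICMP_PROTOCOL = 1        # IPv4 "protocol" field value for ICMP
ICMP_TIME_EXCEEDED = 11  # ICMP type 11; code 0 = TTL exceeded in transit


def time_exceeded_info(packet: bytes) -> Optional[Tuple[str, str, str]]:
    """If `packet` is an IPv4 datagram carrying ICMP Time Exceeded, return
    (reporting_host, original_src, original_dst); otherwise return None."""
    if len(packet) < 20 or packet[0] >> 4 != 4:
        return None                            # too short or not IPv4
    ihl = (packet[0] & 0x0F) * 4               # outer header length in bytes
    if ihl < 20 or packet[9] != ICMP_PROTOCOL:
        return None
    if len(packet) < ihl + 8 + 20:             # ICMP header + quoted IPv4 header
        return None
    if packet[ihl] != ICMP_TIME_EXCEEDED:
        return None
    reporter = ipaddress.IPv4Address(packet[12:16])
    quoted = packet[ihl + 8:]                  # original header quoted in the error
    original_src = ipaddress.IPv4Address(quoted[12:16])
    original_dst = ipaddress.IPv4Address(quoted[16:20])
    return str(reporter), str(original_src), str(original_dst)


def tally_original_destinations(packets: Iterable[bytes]) -> Counter:
    """Count where the packets that ran out of TTL were originally headed."""
    counts: Counter = Counter()
    for pkt in packets:
        info = time_exceeded_info(pkt)
        if info is not None:
            counts[info[2]] += 1
    return counts
```

Tallying the original destinations makes it quick to see whether the "expired" packets were aimed at hosts you never contacted.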

Oh, it is ON